Multimodal human-computer interaction technology - HUD, head up display system

1、 Overview

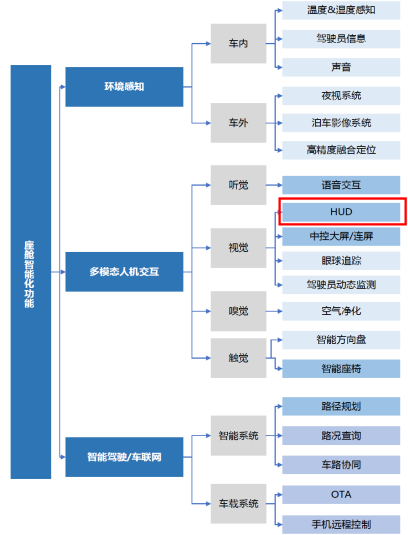

An Intelligent Connected Vehicle (ICV) is a vehicle equipped with advanced onboard sensors, controllers, actuators, and other devices that integrates modern communication and network technologies to exchange and share information intelligently among vehicles, people, roads, and the cloud. As vehicles become more intelligent, the intelligent cabin is becoming increasingly common and brings together a growing range of functions.

Because vision is the primary channel of human-computer interaction, the HUD (Head Up Display) is becoming increasingly important. Its core function is to use a picture generation unit and an optical imaging system to project vehicle information into the driver's field of view, so the driver can read key information without looking down. This reduces how often the driver looks away from the road, lowers the risk of "blind driving", and improves driving safety.

From the perspective of human-computer interaction, the HUD is a key visual interaction technology. It serves users in scenarios such as safe driving, navigation, entertainment, and information exchange, providing a comprehensive human-vehicle interaction experience. In navigation, for example, the HUD projects guidance directly in the driver's line of sight, so the driver is not distracted by the central control screen or a mobile phone, which improves both safety and convenience.

From the perspective of market demand, the HUD is a feature customers notice easily: its function is obvious, it feels high-tech, and it has strong visual impact. Consumers are therefore quite willing to pay for it. According to relevant surveys, 30.2% of consumers are willing to pay for HUD functionality, which ranks among the highest of all cabin functions. This suggests that the HUD has great potential in the automotive market, since it can attract buyers and make vehicles equipped with it more competitive.

2、 The Development History and Classification of HUD

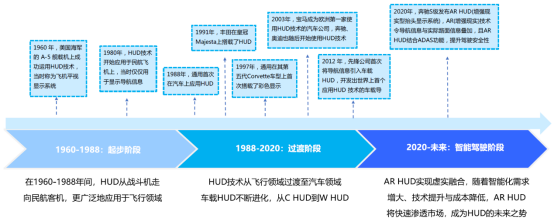

(1) Development history of HUD

HUD technology has a long history that began in aviation. In 1960, a head up display system, the predecessor of today's HUD, was successfully fitted to the American A-5 carrier-based aircraft. It projected flight data onto a see-through glass in the pilot's forward field of view, greatly improving flight safety and efficiency. Between 1960 and 1988, HUDs gradually spread from fighter jets to commercial aircraft. In 1988, General Motors launched the first car equipped with a HUD, the Oldsmobile Cutlass Supreme Indy 500 Pace Car, marking the technology's move from aviation into the automotive field.

Early automotive HUDs were mainly C HUDs (Combiner Head Up Displays), which had a small imaging area, displayed little information, and posed safety hazards, so they have gradually been phased out. The W HUD (Windshield Head Up Display) then became the main growth driver of the in-car HUD market thanks to its greater information capacity, larger imaging area, and better image quality. In recent years, with advances in image generation technology and the development of wedge-shaped windshields, the AR HUD (Augmented Reality Head Up Display) has gradually entered the market and become a new direction for HUD technology. In 2020, the Mercedes-Benz S-Class launched with an AR HUD, which overlays virtual digital information directly on the real road. Class I important driving information, Class II auxiliary driving information, and Class III comfort information can all be merged with the outside scene and displayed in front of the driver, greatly improving driving safety, information readability, and comfort.

Looking ahead, as consumers demand a more intelligent automotive experience and as intelligent driving and AR optics continue to advance, the AR HUD experience will improve and its cost will fall. AR HUD is therefore expected to penetrate the market quickly and become the future trend of HUD.

(2) Technical classification and principles

① C HUD (Combiner Head Up Display)

C HUD was the main form of early in-car HUD. Its imaging surface is a small piece of semi-transparent resin glass mounted in front of the driver, with a field of view (FOV) of typically only 5° × 1°-4° and a projection area of about 6-8 inches. The structure is relatively simple and inexpensive. Even if the vehicle is not factory-equipped, a unit can be bought cheaply on the aftermarket and installed, reading vehicle information through the OBD interface. However, the drawbacks of C HUD are obvious. The displayed content is very limited, mainly basic information such as vehicle speed and navigation, and the image is blurry. Because the image forms close to the eyes, drivers must frequently refocus, which easily causes visual fatigue and can even lead to accidents. In addition, in a collision the resin glass of the C HUD may cause secondary injury to the driver. As a result, by 2024 the vast majority of car companies had abandoned factory-fitted C HUDs, apart from a few models.

② W HUD (Windshield Head Up Display)

W HUD is the mainstream product in the current in-car HUD market. It uses optical components to reflect information off the windshield. Compared with the C HUD, its imaging area is enlarged to a portion of the front windshield, with a field of view of up to 10° × 4° and a projection area of 7-15 inches, and the image quality is significantly better. To eliminate ghosting, the windshield for a W HUD usually needs a special design: it is made wedge-shaped, thicker at the top and thinner at the bottom, with a PVB film between the two layers of glass, so that the images reflected from the inner and outer surfaces overlap. The display module is housed in a slot below the windshield, which places higher demands on the field of view. The larger the field of view, the greater the display range and the richer the information, but manufacturers differ in what information they select and how they design the UI. If the design is poor, the interface can look cluttered and harm the user experience.
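To make the ghosting problem concrete, the following minimal sketch estimates how far apart the two reflections would be on an ordinary flat, plane-parallel windshield, which is the case the wedge design is meant to correct. It uses only Snell's law and plane geometry. The thickness (5 mm), refractive index (1.52), incidence angle (60°), and viewing distances are illustrative assumptions, not figures from this article, and the function names are hypothetical.

```python
import math


def ghost_separation_mm(thickness_mm: float, incidence_deg: float, n: float = 1.52) -> float:
    """Perpendicular distance between the front-surface and back-surface
    reflections of one ray on a flat, plane-parallel glass plate."""
    theta_i = math.radians(incidence_deg)
    theta_r = math.asin(math.sin(theta_i) / n)  # Snell's law: refraction angle in the glass
    # The ray crosses the plate twice, shifting 2*t*tan(theta_r) along the surface;
    # cos(theta_i) projects that shift perpendicular to the reflected beam.
    return 2.0 * thickness_mm * math.tan(theta_r) * math.cos(theta_i)


def ghost_angle_arcmin(separation_mm: float, viewing_distance_m: float) -> float:
    """Small-angle estimate of the apparent offset between image and ghost."""
    return math.degrees(separation_mm / 1000.0 / viewing_distance_m) * 60.0


if __name__ == "__main__":
    d = ghost_separation_mm(thickness_mm=5.0, incidence_deg=60.0)  # assumed values
    print(f"separation between reflections: {d:.2f} mm")
    for distance_m in (2.5, 7.5):  # assumed distances from eye to virtual image
        print(f"at {distance_m} m: about {ghost_angle_arcmin(d, distance_m):.1f} arcmin")
```

Under these assumptions the two reflections are a few millimetres apart, and the apparent offset shrinks as the virtual image moves farther away. A real windshield is curved and laminated, so this is only a first-order picture of why an uncorrected windshield shows a double image.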

③ AR HUD (Augmented Reality Head Up Display)

AR HUD is an advanced form of HUD that uses AR optical imaging and virtual-real fusion to project virtual information, such as driving data and intelligent driving status, onto the front windshield and align it with the real-time road scene outside the vehicle, giving drivers an integrated view of virtual and real information. The imaging area of an AR HUD is larger, and it uses a more advanced PGU (Picture Generation Unit). The field of view can reach 13° × 5° or more, the projection area can exceed 20 inches, and the Virtual Image Distance (VID) is also greater, far exceeding the performance of C HUD and W HUD.

In practice, AR HUD supports a range of functions. In navigation scenarios, it provides real-time fused navigation, placing virtual elements such as route guidance and direction arrows directly on the actual road surface, so drivers get navigation information without shifting their gaze. In ADAS scenarios, AR HUD visualizes intelligent driving behavior, helping drivers monitor the vehicle through virtual indicators such as lane keeping and lead-vehicle recognition, and it combines these with warnings anchored to the real scene to reduce the likelihood of accidents. In lifestyle and entertainment scenarios, as vehicles become more intelligent, AR HUD can turn the front windshield into a large display for gaming, watching movies, social entertainment, and more. It can also project points of interest along the street, such as commercial areas and entertainment venues, linking the cabin with the scene outside the car.

The AR HUD workflow comprises three stages: data collection, algorithm fusion, and imaging.
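As a rough illustration of what placing a navigation arrow "on the road" involves, the sketch below converts a 3D point in a driver-eye coordinate frame into horizontal and vertical viewing angles and checks whether it falls inside a 13° × 5° field of view. The coordinate convention, the eye height of 1.2 m, and the look-down angle of 2.5° for the centre of the image are assumptions chosen for the example, and the function names are hypothetical.

```python
import math
from typing import Tuple

FOV_H_DEG = 13.0  # horizontal field of view quoted for AR HUD
FOV_V_DEG = 5.0   # vertical field of view quoted for AR HUD


def to_view_angles(x_fwd_m: float, y_left_m: float, z_up_m: float) -> Tuple[float, float]:
    """Return (azimuth, elevation) in degrees of a point seen from the eye.
    Frame (assumed): x forward, y to the left, z up, origin at the driver's eye."""
    azimuth = math.degrees(math.atan2(y_left_m, x_fwd_m))
    elevation = math.degrees(math.atan2(z_up_m, math.hypot(x_fwd_m, y_left_m)))
    return azimuth, elevation


def in_fov(azimuth_deg: float, elevation_deg: float, center_elevation_deg: float = -2.5) -> bool:
    """True if the direction lies inside the HUD image, whose centre is assumed
    to sit straight ahead and center_elevation_deg below the horizon."""
    return (abs(azimuth_deg) <= FOV_H_DEG / 2.0
            and abs(elevation_deg - center_elevation_deg) <= FOV_V_DEG / 2.0)


if __name__ == "__main__":
    eye_height_m = 1.2  # assumed height of the eye above the road
    for distance_m in (10.0, 30.0, 60.0):
        az, el = to_view_angles(distance_m, 0.0, -eye_height_m)
        print(f"road point {distance_m:>4.0f} m ahead: elevation {el:6.2f} deg, "
              f"drawable={in_fov(az, el)}")
```

With these assumed values, a road point 10 m ahead lies below the image and cannot be drawn, while points 30 m and farther ahead fall inside it. This is one reason a larger field of view allows guidance graphics to cover more of the road.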

Data collection

The front end of the AR HUD collects data from several sources: cameras, in-car sensors, and ADAS functions such as collision warning, lane departure warning, pedestrian warning, and traffic sign recognition. The in-car navigation system also supplies route information. These sources act as the "eyes" and "ears" of the AR HUD, sensing the vehicle's surroundings and driving status in real time and providing the basic data for subsequent processing and display.

Algorithm fusion

The collected data is processed by software algorithms in the middle tier. These algorithms span three layers: input, execution, and output. The input layer involves recognition, tracking, computer vision, and other ADAS-related algorithms, so the more mature the ADAS technology, the better the input layer performs. The execution layer uses sensor fusion, high-definition map integration, and prediction algorithms to fuse and process the front-end data within milliseconds, effectively reducing image latency. The output layer uses techniques such as 3D rendering, image stabilization, distortion correction, real-time image adjustment, and virtual-real fusion to render and output real-time video and images, improving clarity and timeliness so that what the driver sees is stable, clear, and accurate.
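The three layers can be pictured as a small pipeline. The sketch below is a deliberately simplified illustration, not any vendor's implementation: the input layer turns raw detections into typed tracks, the execution layer applies a constant-velocity prediction to compensate for display latency, and the output layer emits text draw commands as a stand-in for rendering. The `TrackedObject` schema, the function names, and the 80 ms latency are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TrackedObject:
    """Object state in the vehicle frame (hypothetical schema)."""
    label: str
    x_m: float     # distance ahead of the vehicle
    y_m: float     # lateral offset, left positive
    vx_mps: float  # relative longitudinal velocity
    vy_mps: float  # relative lateral velocity


def input_layer(detections: List[Dict]) -> List[TrackedObject]:
    """Input stage: convert raw ADAS detections into typed tracks."""
    return [TrackedObject(**d) for d in detections]


def execution_layer(tracks: List[TrackedObject], latency_s: float) -> List[TrackedObject]:
    """Execution stage: predict where each object will be when the frame is
    actually shown, assuming constant relative velocity over the latency."""
    return [
        TrackedObject(t.label,
                      t.x_m + t.vx_mps * latency_s,
                      t.y_m + t.vy_mps * latency_s,
                      t.vx_mps, t.vy_mps)
        for t in tracks
    ]


def output_layer(tracks: List[TrackedObject]) -> List[str]:
    """Output stage: emit draw commands (a stand-in for 3D rendering)."""
    return [f"draw {t.label} marker at x={t.x_m:.1f} m, y={t.y_m:.1f} m" for t in tracks]


if __name__ == "__main__":
    raw = [{"label": "lead_vehicle", "x_m": 40.0, "y_m": 0.0, "vx_mps": -10.0, "vy_mps": 0.0}]
    for command in output_layer(execution_layer(input_layer(raw), latency_s=0.08)):
        print(command)
```

In this example a lead vehicle closing at 10 m/s moves 0.8 m during an assumed 80 ms of latency, so the marker is drawn at the predicted position instead of the stale measured one. Production systems would use richer motion models and sensor fusion, but the idea of drawing for the moment of display is the same.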

Imaging process

The back end of the AR HUD uses its core component, the PGU (Picture Generation Unit), to project images with an AR micro-display scheme. The current mainstream micro-display solutions are TFT-LCD, DLP, LCoS, LBS (laser beam scanning), and Micro LED. Taking TFT-LCD as an example, its core component is the liquid crystal cell, which forms the light and dark parts of the image by controlling how much light the liquid crystal transmits. After the LED backlight emits light, the TFT substrate applies a voltage that sets the orientation of the liquid crystal molecules and thus the amount of light that passes through. In the projection stage, with the help of freeform mirrors and an upgraded windshield, the image is formed through three reflections. The image generated by the PGU is first reflected by a folding mirror, then magnified and reflected a second time by a rotatable aspherical mirror, and finally passes through a stray light trap to the windshield for the third reflection. It then enters the driver's eyes as a virtual image that blends with the real scene outside the car, producing the AR effect of virtual-real fusion.
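The optical chain described above also explains why HUD backlights must be very bright: every element passes on only part of the light. The sketch below multiplies the transmittance of the LCD by the reflectances of the three reflecting surfaces to estimate the luminance that reaches the eye, and inverts the calculation to find the backlight luminance needed for a target. All efficiency values are illustrative assumptions, not measured data, and the function names are hypothetical. The 12000 nit target is the in-eye brightness figure quoted later in this article for the Wenjie M9.

```python
from typing import Dict

# Assumed, illustrative efficiencies for each element in the light path.
ASSUMED_PATH: Dict[str, float] = {
    "lcd_transmittance": 0.06,
    "fold_mirror_reflectance": 0.90,
    "aspherical_mirror_reflectance": 0.90,
    "windshield_reflectance": 0.20,
}


def path_efficiency(path: Dict[str, float]) -> float:
    """Fraction of the backlight luminance that survives the whole path."""
    efficiency = 1.0
    for factor in path.values():
        efficiency *= factor
    return efficiency


def eye_luminance_nits(backlight_nits: float, path: Dict[str, float]) -> float:
    """Luminance of the virtual image, ignoring all other losses."""
    return backlight_nits * path_efficiency(path)


def required_backlight_nits(target_eye_nits: float, path: Dict[str, float]) -> float:
    """Backlight luminance needed to reach a target luminance at the eye."""
    return target_eye_nits / path_efficiency(path)


if __name__ == "__main__":
    eff = path_efficiency(ASSUMED_PATH)
    need = required_backlight_nits(12000.0, ASSUMED_PATH)
    print(f"path efficiency: {eff:.4%}")
    print(f"backlight needed for 12000 nits at the eye: {need:,.0f} nits")
```

Under these assumptions less than 1% of the backlight luminance reaches the driver, so a backlight on the order of a million nits would be needed. The exact numbers depend heavily on the real components, which is one reason different PGU technologies compete on optical efficiency.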

3、 HUD applications by automakers (OEMs)

(1) HUD application of Li Auto

Li Auto is a representative example of HUD application, and its display content is extremely rich. Basic driving data such as vehicle speed and gear are shown in real time, giving drivers a clear view of the vehicle's operating status. The HUD image is generally divided into three areas: left, center, and right. Under normal driving conditions, the left area shows regular navigation information, with the estimated arrival time below it; the center mirrors part of the central control screen; and the right area shows speed, speed limit, driving status, gear, and the current time. For intelligent driving, 3D spatial perspective technology overlays dynamic information such as pedestrians, vehicles, road boundaries, and obstacles on the real field of view as semi-transparent layers, together with autonomous driving status, speed limits, and weather warnings. Once the digital rearview mirror imaging function is enabled, switching on a turn signal makes the HUD display the side-view image, reducing the risk from blind spots.

Li Auto tightly controls the ghosting deviation of the front windshield and the surface shape of the curved mirror to eliminate ghosting. The 2.5-meter short-distance projection and the -3.7 degree "golden" viewing angle are designed to keep the image clearly visible and prevent visual fatigue. The HUD also adjusts its brightness automatically to the ambient light and has a snow mode for special environments.

In addition, the HUD is deeply integrated with the vehicle's infotainment system. Drivers can conveniently change the HUD's display content and settings through the safety interactive screen or by voice control. With OTA upgrades of the infotainment system, the HUD's functions continue to be optimized and expanded, and its practicality and intelligence keep improving, giving users a better driving experience.

(2) HUD application of Xiaomi cars

① HUD application

On Xiaomi's early Max model, the HUD focused mainly on map navigation, with navigation information occupying about 80% of the image. Besides conventional navigation instructions, the key information shown also included the remaining battery range. The brightness of this HUD is outstanding, with a maximum of 13000 nits, so the driver can read information clearly even in strong light. This effectively solves the problem of some HUDs being hard to see in sunlight.

Xiaomi's HUD is also impressive in its interaction design. It supports a partitioned display, for example navigation on the left, vehicle speed in the middle, and music lyrics on the right. Drivers can also customize the display mode, for instance highlighting key information such as RPM and G-value in sport mode. The HUD position adjusts automatically to the driver's seating position, giving each driver the most suitable viewing angle. Beyond that, the front passenger can use the HUD to watch movies or short videos while the vehicle is parked (the driver's side is not affected while driving), which adds some fun to the ride.
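A partitioned, mode-dependent display of this kind can be modelled as a small configuration table. The sketch below is purely illustrative and is not Xiaomi's software: the zone names, the content identifiers, the placement of RPM and G-value in sport mode, and the use of gear "P" to detect a parked vehicle are all assumptions.

```python
from typing import Dict

# Default three-zone layout following the example in the text.
DEFAULT_LAYOUT: Dict[str, str] = {
    "left": "navigation",
    "middle": "vehicle_speed",
    "right": "music_lyrics",
}

# Assumed placement: the text says sport mode highlights RPM and G-value,
# but not which zones they occupy.
SPORT_OVERRIDES: Dict[str, str] = {
    "left": "rpm",
    "right": "g_value",
}


def layout_for(mode: str) -> Dict[str, str]:
    """Return the zone-to-content mapping for a display mode."""
    layout = dict(DEFAULT_LAYOUT)  # copy so the default is never mutated
    if mode == "sport":
        layout.update(SPORT_OVERRIDES)
    return layout


def passenger_video_allowed(gear: str) -> bool:
    """Video playback is only offered while the vehicle is parked."""
    return gear == "P"


if __name__ == "__main__":
    print(layout_for("normal"))
    print(layout_for("sport"))
    print("video in P:", passenger_video_allowed("P"))
    print("video in D:", passenger_video_allowed("D"))
```

Keeping the layout as data makes driver customization a matter of editing a mapping, while safety rules such as the parked-only video check stay in code.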

② PHUD application

PHUD was first proposed by BMW. Spy photos suggest that the panoramic display on the Xiaomi YU7 may be a PHUD, which turns the lower half of the entire front windshield into a "giant display", officially known as the "panoramic view bridge". A dark coating along the bottom of the windshield allows vehicle speed, navigation, music, driving assistance information, and more to be shown across its full width from left to right, resembling an ultra-wide "ribbon fish" screen.

In terms of content, the navigation map appears directly in the driver's line of sight, and turn guidance at intersections is clear at a glance. The driver does not need to turn their head to check the central control screen, which greatly improves safety and convenience. Information from the intelligent driving system, such as lane keeping status, distance to the vehicle ahead, and lane change suggestions, can also be presented in real time. This display method matches driving intuition and reduces the risk of misjudgment. In addition, when a turn signal is switched on, the HUD can show real-time side and rear images and give timely warnings when the car crosses a lane line or gets too close to another vehicle, like an attentive driving instructor who is always on board.

(3) AR HUD application of Wenjie M9

The AR HUD of the Wenjie M9 features dynamic lane guidance arrows and even incorporates the Kunpeng dynamic effect. It also supports entertainment functions such as watching movies while parked, giving drivers and passengers a richer experience.

The intention of the intelligent driving system is shown visually through MOP lines that align closely with the real world, so drivers can clearly understand what the system is doing. Body camera images and reversing images are integrated into the display, letting drivers perceive the vehicle's surroundings without shifting their gaze. For navigation, it combines multidimensional information with lane line geometry to provide accurate lane-level AR navigation, making the route clear at a glance. In intelligent driving scenarios, warning prompts are aligned with the real objects they refer to, helping drivers spot danger early. Common information such as traffic light countdowns, safety assistance, and night vision and rain/fog enhancement prompts is also available.

The system also has several distinctive features. It supports the Kunpeng dynamic effect, in which the direction the Kunpeng swims is the direction of vehicle movement, adding a sense of ceremony and fun to driving. When the vehicle is parked, a cinema-grade giant-screen image of up to 75 inches at a distance of 8.5 meters can be projected ahead, giving drivers and passengers a unique viewing experience.

The display performance is equally strong. Using an LCoS solution, it achieves an equivalent display of 70 inches at 7.5 meters and 96 inches at 10 meters, with a field of view of 13° × 5°, described as the largest among mass-produced models worldwide. The resolution is 1920 × 730, giving a clear and detailed image. The in-eye brightness reaches 12000 nits, so the image stays stable and clear even in strong light, and the contrast ratio reaches 1200:1, keeping the image vivid and sharp.
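The relationship between field of view, image distance, and "equivalent screen size" is simple trigonometry, and it can be used to sanity-check the figures above. The sketch below computes the width, height, and diagonal of a flat virtual image that fills a given field of view at a given distance. It assumes the quoted size is the diagonal of that image, which is an interpretation and is not stated in the source. The function name is hypothetical.

```python
import math

METERS_PER_INCH = 0.0254


def virtual_image_diagonal_in(fov_h_deg: float, fov_v_deg: float, distance_m: float) -> float:
    """Diagonal, in inches, of a flat image that fills the given field of view
    when viewed from distance_m."""
    width_m = 2.0 * distance_m * math.tan(math.radians(fov_h_deg) / 2.0)
    height_m = 2.0 * distance_m * math.tan(math.radians(fov_v_deg) / 2.0)
    return math.hypot(width_m, height_m) / METERS_PER_INCH


if __name__ == "__main__":
    for distance_m in (7.5, 10.0):
        size = virtual_image_diagonal_in(13.0, 5.0, distance_m)
        print(f"13 x 5 deg at {distance_m} m: about {size:.0f} inches")
```

For a 13° × 5° field of view this gives roughly 72 inches at 7.5 meters and 96 inches at 10 meters, which is consistent with the quoted 70 and 96 inches. It also shows that the "inch" figure grows linearly with the image distance, so it is only meaningful together with that distance.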

The AR HUD of the Wenjie M9 also does well at improving the driving experience. Its VID (Virtual Image Distance) is well over 7 meters, so the HUD information lies at nearly the same focus as the road scene the driver is watching. The driver does not need to refocus frequently, which effectively reduces driving fatigue. Deep integration with the advanced intelligent driving system further improves drivers' intuitive understanding of what the vehicle is doing and strengthens their trust and sense of security in intelligent driving functions.
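The effect of image distance on refocusing can be expressed with the standard optical measure of accommodation: the focusing demand in diopters is the reciprocal of the viewing distance in meters. The sketch below compares how large a refocus step the eye makes when switching between the road and a HUD image at different distances. The 50 m road distance is an assumed typical gaze distance, and the function names are hypothetical. The 2.5 m and 7.5 m image distances are figures mentioned elsewhere in this article.

```python
def accommodation_diopters(distance_m: float) -> float:
    """Focusing demand of the eye for an object at distance_m (D = 1 / m)."""
    return 1.0 / distance_m


def refocus_step_diopters(hud_distance_m: float, road_distance_m: float = 50.0) -> float:
    """Change in focusing demand when the gaze moves between road and HUD image.
    road_distance_m is an assumed typical gaze distance."""
    return abs(accommodation_diopters(hud_distance_m) - accommodation_diopters(road_distance_m))


if __name__ == "__main__":
    for hud_distance_m in (2.5, 7.5, 10.0):
        step = refocus_step_diopters(hud_distance_m)
        print(f"HUD image at {hud_distance_m:>4} m: refocus step of about {step:.2f} D")
```

Under this assumption, an image at 2.5 meters requires a refocus step of about 0.38 D, while one at 7.5 meters requires only about 0.11 D. The farther image asks much less of the eye each time the gaze switches, which matches the fatigue argument made above.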

4、 Future development direction

As technology develops, both the optical display quality and the algorithms of AR HUD will continue to improve. In optical design, optical waveguide technology is expected to make breakthroughs. It is attracting wide attention as a key way to resolve the trade-off between unit volume and FOV (field of view) in AR HUD. A waveguide couples the image in and out through optical couplers and can output the complete original image directly, without multiple mirror reflections, so it can deliver a large FOV while making the HUD unit thinner and lighter. As the technology matures, its manufacturing difficulty will fall and its yield will rise, promoting the further development of AR HUD.

In algorithms, more emphasis will be placed on the accuracy and timeliness of data processing, and on the clarity, layering, visual appeal, and stability of content display and rendering. Optimized algorithms will reduce image latency, improve virtual-real fusion, reduce driver visual fatigue, and raise the overall performance of AR HUD.

Meanwhile, the relatively high cost of AR HUD currently limits its adoption to some extent. As technology advances and mass production scales up, this cost is expected to fall gradually. On the one hand, continued upgrades in micro-display technology will drive costs down, for example through better cost-effectiveness in TFT technology and better cost control in DLP technology. On the other hand, as the industrial chain matures and local supply chains grow, raw material and production costs will be better controlled, making AR HUD prices more competitive, bringing the technology into the mass market, and increasing market penetration.

Note: The data and images cited in the article are sourced from the internet