End-to-End Models for Autonomous Driving: Technological Evolution, Application Practice, and Future Prospects

1. The development of autonomous driving technology and the rise of end-to-end models

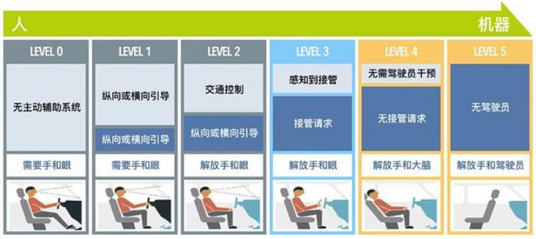

The development of autonomous driving technology has been a gradual process, moving from simple early driver-assistance functions toward high automation and, ultimately, fully autonomous driving. Early assistance systems such as the anti-lock braking system (ABS) and electronic stability control (ESC) were designed primarily to enhance safety, and control of the vehicle remained firmly with the driver. As sensor technology, computing power, and algorithms advanced, functions such as adaptive cruise control (ACC) and lane departure warning (LDW) appeared one after another, and vehicles began to gain some capacity for automated operation.

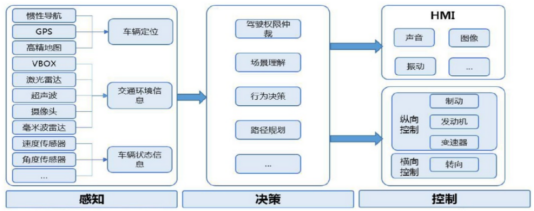

Throughout this development, traditional autonomous driving systems have generally used a modular architecture. The perception module collects information about the surrounding environment, such as vehicles, pedestrians, road signs, and obstacles; the decision and planning module analyzes the perception data to formulate a driving strategy, including path planning, speed control, and avoidance decisions; and the execution module controls the vehicle's steering, acceleration, and braking according to the planning module's instructions.

However, this traditional modular architecture has many problems. As autonomous driving scenarios grow more complex, coordinating the interactions between modules becomes increasingly difficult. In perception, sensors such as cameras, millimeter-wave radar, and LiDAR differ significantly in data format and accuracy, which makes data fusion challenging. The decision and planning module must weigh many factors, including traffic rules, road conditions, and vehicle dynamics constraints, to produce decisions that are safe, comfortable, and efficient, which demands highly complex algorithms and substantial computing power. Moreover, errors tend to accumulate across module boundaries: a small error in perception can be amplified by downstream modules and degrade the performance of the whole system.
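
A small geometric example shows how such amplification can happen. The sketch below assumes a planner that aims at a point a fixed look-ahead distance ahead along the lane heading reported by perception; `lateral_offset` is a hypothetical helper, not part of any system described here. A heading error of only 1 degree produces a sideways miss that grows in proportion to the look-ahead distance.

```python
import math

def lateral_offset(lookahead_m: float, heading_error_deg: float) -> float:
    """Sideways miss at a look-ahead distance caused by a perception heading error.

    Hypothetical model: the planner aims along a lane heading that is off by
    `heading_error_deg`, so its target point lands lookahead * tan(error)
    away from the true lane centre.
    """
    return lookahead_m * math.tan(math.radians(heading_error_deg))

# The same 1-degree perception error evaluated at increasing look-ahead distances.
for d in (10.0, 50.0, 100.0):
    print(f"{d:5.0f} m ahead -> {lateral_offset(d, 1.0):.2f} m off-centre")
```

With these numbers, the same 1-degree error places the aim point about 0.17 m off-centre at 10 m but about 0.87 m off at 50 m.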

The end-to-end autonomous driving model emerged in this context. It removes the boundaries between the traditional modules: data collected by the vehicle's sensors, such as camera images and radar point clouds, is taken directly as input, and vehicle control commands, such as steering wheel angle, throttle opening, and braking force, are produced directly as output. The architecture is inspired by human driving: people do not precisely analyze every visual element on the road but make quick decisions based on experience accumulated over time. Likewise, the end-to-end model uses deep neural networks to learn the direct mapping from driving scenes to driving actions from large amounts of driving data, aiming at simpler and more efficient autonomous driving control.
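
Learning actions directly from driving data can be illustrated with behavioral cloning (imitation learning), one common way such models are trained. The sketch below is a deliberately tiny, assumed setup rather than a real end-to-end model: a linear policy instead of a deep network, two hand-picked features (a hypothetical lane offset and heading error) instead of raw pixels, and synthetic "expert" demonstrations. Training simply minimizes the squared difference between the policy's steering and the logged expert steering.

```python
import random

def fit_linear_policy(samples, lr=0.5, epochs=1000):
    """Fit steering = w . x + b to (features, steering) pairs by full-batch
    gradient descent on the mean squared error (behavioral cloning)."""
    n_feat = len(samples[0][0])
    w, b, n = [0.0] * n_feat, 0.0, len(samples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in samples:
            err = sum(wi * xi for wi, xi in zip(w, x)) + b - y  # prediction minus expert label
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / n
            grad_b += 2 * err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Synthetic expert demonstrations (hypothetical): features are
# (lane_offset_m, heading_error_rad); the expert steers back toward the lane centre.
rng = random.Random(0)
demos = []
for _ in range(200):
    offset, heading = rng.uniform(-1, 1), rng.uniform(-0.2, 0.2)
    steering = -0.8 * offset - 1.5 * heading + rng.gauss(0, 0.01)
    demos.append(((offset, heading), steering))

w, b = fit_linear_policy(demos)
print(w, b)
```

After training, `w` should be close to the expert's coefficients (-0.8, -1.5) and `b` close to 0. A real end-to-end model replaces the linear map with a deep network and the two features with raw sensor data, but in this imitation-learning setting the training signal, matching logged human actions, is the same.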

2. Advantages and disadvantages of end-to-end models

(1) The advantages of end-to-end models

①. A concise architecture reduces the overall complexity of the control system

A traditional autonomous driving system uses a modular architecture made up of several relatively independent modules, such as perception, decision and planning, and execution. These modules require complex information exchange and coordination, which not only makes the system harder to design and develop but also makes the interfaces between modules prone to problems. For example, when object information recognized by the perception module is passed to the planning module, decision errors may arise from data format conversion or information loss.

The end-to-end model greatly simplifies this process: it takes sensor data (such as camera images and radar point clouds) directly as input, processes it with a neural network, and directly outputs vehicle control commands (such as steering wheel angle, throttle, and brake). This architecture avoids the cumbersome interactions between modules, removes error sources at intermediate interfaces, and makes the overall system more concise and efficient. NVIDIA's early end-to-end driving model is an example: it uses a relatively simple convolutional neural network to map road images captured by a camera directly to steering commands, without the explicit intermediate steps, such as object detection and path planning, found in traditional architectures.

②. Strong learning ability to adapt to complex scenarios

Deep neural networks give end-to-end models powerful learning capabilities, enabling them to learn complex driving patterns and scene features automatically from large amounts of driving data. Unlike traditional rule-based systems, end-to-end models do not require detailed driving rules to be written by hand; by learning from massive amounts of driving data, they can discover patterns that are difficult to express as rules.

Complex urban traffic involves many kinds of participants and constantly changing road conditions, such as pedestrians suddenly stepping into the road or vehicles changing lanes unpredictably. By learning from large amounts of data covering similar situations, an end-to-end model can respond reasonably to them. Tesla's Full Self-Driving (FSD) system adopts an end-to-end architecture and is trained on large amounts of real-world driving data, with the aim of letting the vehicle respond flexibly to emergencies and make appropriate decisions in complex urban conditions, much as an experienced driver would.

③. Data-driven, easy to optimize and improve

End-to-end models are data-driven: as training data grows in volume and quality, model performance can continue to improve. By collecting driving data from more diverse scenarios, covering different weather conditions, road types, and driving behaviors, researchers let the model learn a broader range of knowledge and improve its adaptability and accuracy across situations.

If the model performs poorly in a specific scenario, for example misjudging the distance to the preceding vehicle on a rainy highway, more data can be collected specifically for rainy highway scenarios and used to retrain or fine-tune the model. This data-driven approach to optimization is relatively flexible and does not require large-scale changes to the system architecture, which lowers the cost of improvement. Many autonomous driving companies have built large data collection and annotation teams that continuously gather new data to train and optimize end-to-end models and improve their performance and safety.
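
A low-cost complement to collecting new data is to oversample under-represented scenarios during training. The sketch below is one such technique, not necessarily what any particular company does: it assumes every logged frame carries a scenario tag (the tags and counts here are hypothetical) and weights each frame by the inverse of its scenario's frequency, so that every scenario contributes equally to a training batch.

```python
import random
from collections import Counter

def balanced_sample(frames, k, rng):
    """Draw k frames so that each scenario tag is equally likely overall,
    by weighting every frame with 1 / (number of frames sharing its tag)."""
    counts = Counter(tag for tag, _ in frames)
    weights = [1.0 / counts[tag] for tag, _ in frames]
    return rng.choices(frames, weights=weights, k=k)

# Hypothetical driving log: 90% clear-highway frames, 10% rainy-highway frames.
frames = [("clear_highway", i) for i in range(900)] + [("rainy_highway", i) for i in range(100)]
rng = random.Random(0)
batch = balanced_sample(frames, k=10_000, rng=rng)
share = Counter(tag for tag, _ in batch)["rainy_highway"] / len(batch)
print(f"rainy share: raw 10%, rebalanced {share:.0%}")
```

Rebalancing only reuses existing rainy frames more often; it cannot add situations the log never captured, which is why targeted data collection remains necessary.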

④. Good real-time performance and fast response speed

Real-time performance is crucial in autonomous driving. End-to-end models typically use efficient neural network architectures running on dedicated hardware accelerators (such as GPUs and ASICs), so they can process sensor input and output control commands within a short time. Convolutional neural networks, for instance, rely on local connectivity and weight sharing, which greatly reduce computational cost and increase processing speed. At high speed, an end-to-end model can respond quickly to a sudden obstacle ahead by issuing a timely braking or evasive command to avoid a collision. Because they eliminate the transmission and processing time between modules, end-to-end models can have a real-time advantage over traditional modular systems and better meet the strict response-time requirements of autonomous driving.
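
Why milliseconds matter can be made concrete with a worked example. The sketch assumes the vehicle keeps its speed while the system is still processing and then brakes at a constant 7 m/s² (an assumed dry-road value); the function name and numbers are illustrative only.

```python
def stopping_distance(speed_kmh: float, latency_s: float, decel_mps2: float = 7.0) -> float:
    """Distance from the moment an obstacle appears until the vehicle stops.

    The vehicle travels at constant speed during the processing latency,
    then brakes at constant deceleration: d = v * t_latency + v**2 / (2 * a).
    """
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * latency_s + v ** 2 / (2 * decel_mps2)

for latency in (0.05, 0.1, 0.3):
    print(f"latency {latency * 1000:.0f} ms -> {stopping_distance(120, latency):.1f} m")
```

At 120 km/h (about 33.3 m/s), every additional 100 ms of processing delay adds about 3.3 m to the stopping distance, on top of roughly 79.4 m of braking distance.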

(2) Disadvantages of end-to-end models

①. Difficulties in data acquisition and annotation

The performance of end-to-end models depends heavily on large amounts of high-quality data, and acquiring that data is challenging. Collecting data that covers diverse road conditions, weather, and driving scenarios requires substantial manpower, equipment, and time. To obtain driving data in extreme weather (such as rainstorms, snowstorms, and dense fog), teams must not only carry out long test campaigns in those conditions but also ensure the safety and stability of the test vehicles and equipment.

Data annotation is another difficult problem. It requires not only accurate labels for vehicle control parameters (such as steering wheel angle, throttle opening, and braking force) but also detailed labels for the elements of the scene, such as obstacle types, the meaning of traffic signs, and the constraints imposed by traffic rules. Inaccurate or incomplete labels directly harm training and can lead to wrong decisions in practice.

②. The model has poor interpretability and is difficult to trust

The black-box nature of deep neural networks makes the decision-making process of end-to-end models hard to understand. Between the input sensor data and the output control commands, a trained model performs complex neural network computations, so it is difficult to explain intuitively why it made a particular decision. In practice, this lack of interpretability causes serious problems: when a model makes a wrong decision, it is hard to tell whether the cause lies in the model architecture, biases in the training data, or something else, which makes targeted improvement difficult.

③. Limited generalization ability, difficult to adapt to new scenarios

Although end-to-end models perform well in scenarios covered by their training data, their ability to generalize is challenged by new or extreme scenarios they have never seen. Road rules and traffic environments vary greatly between regions, so knowledge learned in one region may not transfer directly to another. In unusual road-construction scenes or rare combinations of weather conditions, the model may misjudge the situation or fail to reach a decision, because it never learned the features of these scenarios or how to handle them during training. This insufficient generalization limits the broad deployment of end-to-end models in a complex and constantly changing real world, and further technical measures, such as data augmentation, are needed to improve their ability to handle new scenarios.
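
Two simple augmentations often used when training image-to-steering models are sketched below as an illustration, not as the method of any system named in this article. `hflip_with_steering` mirrors a frame and negates its steering label, and `adjust_brightness` simulates different lighting. Images are represented as hypothetical nested lists of grayscale values in [0, 1] to keep the example dependency-free.

```python
from typing import List, Tuple

Image = List[List[float]]  # rows of grayscale pixel values in [0, 1]

def hflip_with_steering(image: Image, steering: float) -> Tuple[Image, float]:
    """Mirror a frame left-right and negate its steering label.

    A left-hand curve becomes a right-hand curve, so the steering command
    that was correct for the original frame must change sign.
    """
    return [row[::-1] for row in image], -steering

def adjust_brightness(image: Image, factor: float) -> Image:
    """Simulate darker or brighter lighting by scaling and clipping intensities."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in image]

frame = [[0.1, 0.2, 0.9],
         [0.1, 0.3, 0.8]]
flipped, label = hflip_with_steering(frame, steering=0.25)
dimmed = adjust_brightness(frame, 0.5)
print(flipped, label, dimmed)
```

Mirroring also swaps the side of the road the vehicle drives on, so in practice it can conflict with regional traffic rules, which is one reason augmentation must be chosen with the target deployment in mind.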

④. Lack of clear safety mechanisms

In a traditional modular architecture, each module can be designed and validated for safety according to its specific function. In perception, sensor redundancy can improve reliability; in decision and planning, strict safety rules and constraints can be imposed to keep decisions reasonable. Because the end-to-end model is a single integrated network, it is difficult to build comparably explicit safety mechanisms into it. If the model behaves abnormally during training or operation, the anomaly is hard to detect and correct in time, and because its decision process is hard to explain, potential safety risks are also hard to assess and prevent. This poses significant safety challenges for end-to-end models and calls for new safety techniques and methods.
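
One common way to reintroduce explicit safety rules is to wrap the learned policy in a rule-based monitor that can override it. The sketch below is an assumption for illustration, not a mechanism described in this article: it clamps the network's outputs to valid ranges and forces full braking when the time to collision (TTC), computed from an independent range measurement such as radar, falls below a threshold. `Command`, `safety_filter`, and the 2 s threshold are all hypothetical.

```python
from dataclasses import dataclass, replace

@dataclass
class Command:
    steering: float  # -1 (full left) .. 1 (full right), hypothetical normalization
    throttle: float  # 0 .. 1
    brake: float     # 0 .. 1

def _clamp(x: float, lo: float, hi: float) -> float:
    return min(hi, max(lo, x))

def safety_filter(cmd: Command, gap_m: float, closing_speed_mps: float,
                  min_ttc_s: float = 2.0) -> Command:
    """Rule-based guard applied to the learned policy's raw output."""
    safe = Command(steering=_clamp(cmd.steering, -1.0, 1.0),
                   throttle=_clamp(cmd.throttle, 0.0, 1.0),
                   brake=_clamp(cmd.brake, 0.0, 1.0))
    # Time to collision with the object ahead, from an independent sensor.
    if closing_speed_mps > 0 and gap_m / closing_speed_mps < min_ttc_s:
        safe = replace(safe, throttle=0.0, brake=1.0)  # emergency-brake override
    return safe

nn_output = Command(steering=0.05, throttle=0.6, brake=0.0)  # stand-in for the network
print(safety_filter(nn_output, gap_m=20.0, closing_speed_mps=15.0))
```

Keeping the monitor simple and rule-based is the point of the design: its behavior can be specified and validated separately from the network, which is the kind of module-level guarantee the end-to-end structure otherwise lacks.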

3. The technical cornerstones of end-to-end models

(1) Neural Network Architecture

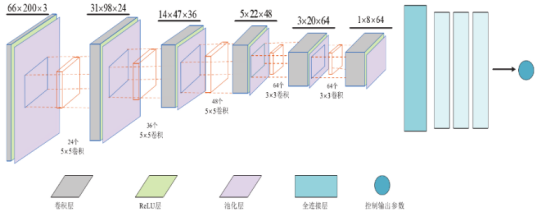

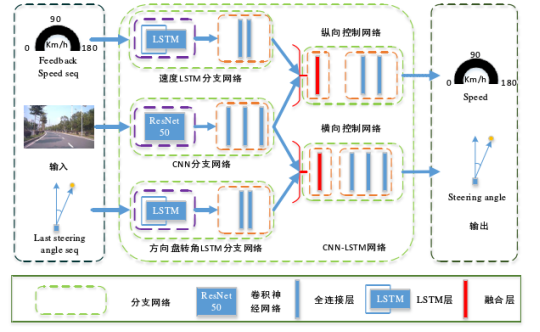

①. Convolutional Neural Networks (CNNs) play a crucial role in how end-to-end models process image data. Their local connectivity and weight sharing not only greatly reduce the number of trainable parameters and improve training efficiency but also extract local image features effectively. NVIDIA's early end-to-end driving model, for example, uses a multi-layer CNN. The lower convolutional layers slide their kernels over the image to extract low-level features such as edges and textures; as the network deepens, higher layers respond to higher-level features of objects such as lane lines, vehicles, and pedestrians. In many CNNs, pooling layers downsample the feature maps produced by the convolutional layers, reducing dimensionality and computation while preserving key features (NVIDIA's model downsamples with strided convolutions instead). After several rounds of convolution and downsampling, the image is transformed into a feature vector that fully connected layers use to predict vehicle control parameters.
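
The two core operations can be sketched in plain Python. This is a minimal illustration, not NVIDIA's implementation: `conv2d` slides one shared 3×3 kernel over the image (local connectivity plus weight sharing), `max_pool2d` downsamples by taking the maximum of each 2×2 block, and the two counting helpers compare the parameters of a convolutional layer with those of a fully connected layer connecting tensors of the same sizes. The 66×200×3 input and 31×98×24 output (a 5×5, stride-2 convolution) are example sizes.

```python
from typing import List

Matrix = List[List[float]]

def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D convolution (cross-correlation, as in CNN libraries), stride 1.

    The same kernel weights are reused at every position (weight sharing),
    and each output depends only on a small patch (local connectivity).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def max_pool2d(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling (stride == size); incomplete edges are dropped."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]

def conv_params(k: int, c_in: int, c_out: int) -> int:
    return k * k * c_in * c_out + c_out  # shared kernel weights + one bias per channel

def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out          # one weight per input-output connection

# A 6x6 image with a dark-to-bright vertical edge between columns 3 and 4.
image = [[0, 0, 0, 0, 1, 1] for _ in range(6)]
vertical_edge = [[-1, 0, 1]] * 3         # responds to left-to-right intensity increases
edges = conv2d(image, vertical_edge)     # 4x4 feature map
pooled = max_pool2d(edges)               # 2x2 after pooling
print(edges, pooled)
print(conv_params(5, 3, 24), dense_params(66 * 200 * 3, 31 * 98 * 24))
```

The vertical-edge kernel responds only where intensity rises from left to right, and pooling keeps that response while halving each spatial dimension. The counts show why weight sharing matters: a 5×5 convolution from 3 to 24 channels has 1,824 parameters, while a dense layer connecting the same input and output sizes would need about 2.9 billion.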

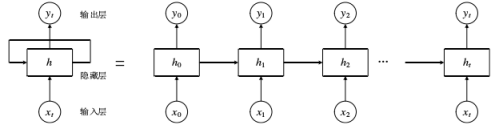

②. Recurrent Neural Networks (RNNs) and their variants: RNNs are designed to process data with temporal structure and can capture the temporal dependencies in it. In autonomous driving, vehicle motion is a continuous time series, and current decisions depend on past scene information; when turning, for example, the steering angle is adjusted based on the preceding trajectory and the current speed. An RNN adds recurrent (feedback) connections to its hidden layer, so the model can retain information from previous time steps and combine it with the current input, which makes it well suited to time-series data.
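
The recurrence can be written as h_t = tanh(W_x x_t + W_h h_{t-1} + b). The sketch below implements this Elman-style update in plain Python with small hand-picked (hypothetical) weights. Feeding the same inputs in a different order yields a different final hidden state, which is exactly the sensitivity to history described above.

```python
import math
from typing import List, Sequence

def rnn_step(x: Sequence[float], h: Sequence[float],
             W_x: List[List[float]], W_h: List[List[float]],
             b: Sequence[float]) -> List[float]:
    """One Elman RNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [math.tanh(sum(W_x[i][j] * x[j] for j in range(len(x)))
                      + sum(W_h[i][k] * h[k] for k in range(len(h)))
                      + b[i])
            for i in range(len(b))]

def run_rnn(inputs, W_x, W_h, b) -> List[float]:
    h = [0.0] * len(b)              # hidden state starts empty
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)
    return h

# Hypothetical 1-D input (e.g. a per-frame event signal) and a 2-unit hidden state.
W_x = [[0.8], [-0.5]]
W_h = [[0.5, 0.1], [0.2, 0.4]]
b = [0.0, 0.0]
h_a = run_rnn([[1.0], [0.0], [0.0]], W_x, W_h, b)  # event happened two steps ago
h_b = run_rnn([[0.0], [0.0], [1.0]], W_x, W_h, b)  # same event, happening now
print(h_a, h_b)
```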

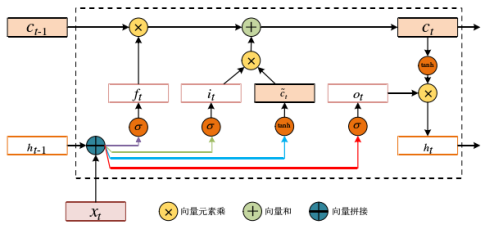

However, traditional RNNs suffer from vanishing and exploding gradients during training and perform poorly on long sequences. Long Short-Term Memory (LSTM) networks mitigate these problems, in particular the vanishing-gradient problem, with gating mechanisms: the input gate controls how much new information enters the memory cell, the forget gate decides how much old information in the cell is discarded, and the output gate determines what the cell outputs. These gates allow an LSTM to retain long-term information more effectively.
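
A single LSTM step makes the role of each gate concrete. The sketch uses a scalar cell and hand-picked parameters (assumptions chosen for clarity, not learned values): the input gate opens only when the input is 1, and the forget-gate bias `b_f` decides whether the stored value survives 50 later steps of zero input.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x: float, h: float, c: float, p: dict):
    """One step of a scalar LSTM cell (input size 1, hidden size 1)."""
    i = sigmoid(p["w_i"] * x + p["u_i"] * h + p["b_i"])    # input gate: how much to write
    f = sigmoid(p["w_f"] * x + p["u_f"] * h + p["b_f"])    # forget gate: how much to keep
    o = sigmoid(p["w_o"] * x + p["u_o"] * h + p["b_o"])    # output gate: how much to expose
    g = math.tanh(p["w_g"] * x + p["u_g"] * h + p["b_g"])  # candidate cell content
    c = f * c + i * g                                      # update the memory cell
    h = o * math.tanh(c)
    return h, c

def final_cell_state(inputs, p) -> float:
    h = c = 0.0
    for x in inputs:
        h, c = lstm_step(x, h, c, p)
    return c

# Hand-picked (not learned) parameters: the input gate opens only for x = 1.
base = dict(w_i=10.0, u_i=0.0, b_i=-5.0, w_f=0.0, u_f=0.0, b_f=0.0,
            w_o=0.0, u_o=0.0, b_o=0.0, w_g=1.0, u_g=0.0, b_g=0.0)
sequence = [1.0] + [0.0] * 50                             # one event, then 50 quiet steps
keep = final_cell_state(sequence, dict(base, b_f=6.0))   # forget gate close to 1
drop = final_cell_state(sequence, dict(base, b_f=-6.0))  # forget gate close to 0
print(keep, drop)
```

With `b_f = 6` the forget gate stays near 1 and the cell still holds about 0.67 after 50 steps, down from about 0.76; with `b_f = -6` the stored value is wiped out almost immediately.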

In end-to-end driving models, LSTMs are commonly used to process image features from multiple frames of a video sequence. During a lane change, for example, an LSTM can use the positions and speeds of vehicles in the adjacent lane and the ego vehicle's state over the previous few frames to predict the appropriate steering angle and speed adjustment more accurately, enabling a safe and smooth maneuver.

③. Transformer model: The core of the Transformer architecture is the attention mechanism, which dynamically computes relationship weights between the elements of an input sequence, focusing on important information and down-weighting less relevant information. In autonomous driving, when processing images from multiple cameras, a Transformer can attend simultaneously to key targets seen from different camera perspectives, such as vehicles ahead, pedestrians, and traffic signs, while suppressing irrelevant information. Tesla's FSD system is a typical application. There, a Transformer transforms features from image space into vector space: through attention and spatial (positional) encoding, the feature at each position in vector space is a weighted combination of the features at all image positions, and these weights are learned automatically rather than designed by hand. Multi-head attention further strengthens feature extraction by letting different heads focus on different aspects of the input, helping the model understand driving scenes comprehensively and make more accurate decisions.
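
The attention computation itself is short. The sketch below implements scaled dot-product attention for a single query, weight_j = softmax_j(q · k_j / √d), with the output being the weighted sum of the values. The query, keys, and values are made-up vectors standing in for a vector-space position and image features; in a real model they come from learned projections, and multi-head attention runs several such computations in parallel with different projections.

```python
import math
from typing import List, Tuple

Vec = List[float]

def softmax(scores: Vec) -> Vec:
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query: Vec, keys: List[Vec], values: List[Vec]) -> Tuple[Vec, Vec]:
    """Scaled dot-product attention for one query; returns (output, weights)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return out, weights

# Hypothetical example: one vector-space query attending over three image-feature positions.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]
out, weights = attention([2.0, 0.0], keys, values)
print(out, weights)
```

The key most similar to the query receives about 77% of the weight, so the output is pulled toward its value.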

(2) Multi-sensor fusion technology

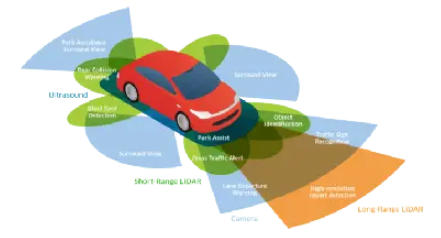

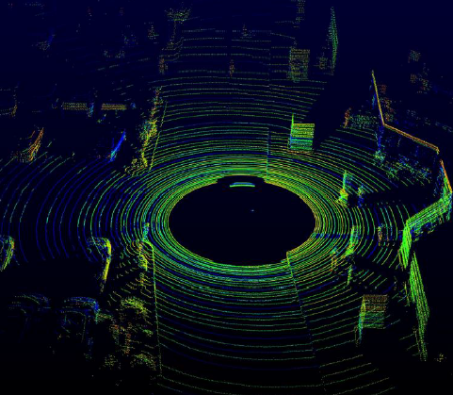

①. Sensor types and characteristics: In an autonomous driving system, multiple sensors work together to perceive the vehicle's environment. Cameras provide rich visual information that helps identify road signs, lane markings, other vehicles, and other targets, but they are strongly affected by lighting and weather: visibility drops in the dark, images are prone to overexposure in strong light, and in rain, snow, or fog image quality deteriorates severely, sometimes to the point where the camera cannot function. Millimeter-wave radar measures the distance, velocity, and angle of targets by transmitting and receiving millimeter-wave signals. It is unaffected by lighting and penetrates fog, smoke, and dust well, but it captures little shape or texture information; it provides only the approximate position and motion state of targets, which makes accurate classification difficult. LiDAR builds a three-dimensional point cloud of the surroundings by emitting laser beams and measuring the time taken for the reflected light to return. It offers very high range and angular resolution, measures target position and shape precisely, and resists interference well, but it is expensive, and its performance degrades in extreme weather such as heavy rain and fog, where water droplets scatter the laser beam and reduce measurement accuracy.
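
The ranging principles above reduce to simple formulas: LiDAR range follows from the round-trip time of a light pulse, and radar radial velocity from the Doppler shift, f_d = 2v/λ. The sketch assumes a 77 GHz radar carrier (a common automotive band) and ignores signal-processing details such as FMCW chirp analysis; the function names are illustrative.

```python
C = 299_792_458.0  # speed of light, m/s

def lidar_range(round_trip_s: float) -> float:
    """Time-of-flight ranging: the pulse travels out and back, so halve the path."""
    return C * round_trip_s / 2

def radar_radial_speed(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial speed from the Doppler shift of a reflected radar signal.

    For a monostatic radar f_d = 2 v / wavelength, so v = f_d * wavelength / 2.
    Positive values mean the target is approaching.
    """
    wavelength = C / carrier_hz
    return doppler_hz * wavelength / 2

print(f"{lidar_range(200e-9):.2f} m")
print(f"{radar_radial_speed(15_410.7):.2f} m/s")
```

A 200 ns round trip corresponds to about 30 m, and a target closing at 30 m/s shifts a 77 GHz return by roughly 15.4 kHz.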

②. Multi-sensor fusion strategy: To exploit the strengths of different sensors and compensate for their weaknesses, multi-sensor fusion is widely used in autonomous driving. Early systems used late (post) fusion, in which each sensor's data is processed independently and the results are then combined. This is relatively simple to implement, debug, and optimize, but it tends to lose detail from the raw data, because each sensor's independent processing compresses and simplifies its data and can discard important information.
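
Late fusion in its simplest form combines each sensor's final estimate. The sketch below uses inverse-variance weighting, the standard minimum-variance way to average independent, unbiased estimates of one quantity; the sensor readings and variances are hypothetical numbers chosen for illustration.

```python
from typing import List, Tuple

def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Late fusion of independent, unbiased estimates given as (value, variance).

    The inverse-variance weighted mean is the minimum-variance linear
    combination; its variance is 1 / sum(1 / var_i).
    """
    inv = [1.0 / var for _, var in estimates]
    total = sum(inv)
    value = sum(w * v for w, (v, _) in zip(inv, estimates)) / total
    return value, 1.0 / total

# Hypothetical range-to-lead-vehicle reports (metres, variance in m^2).
camera = (41.0, 4.0)   # monocular depth: noisy at range
radar = (39.8, 0.25)
lidar = (40.1, 0.04)
distance, variance = fuse_estimates([camera, radar, lidar])
print(f"{distance:.2f} m, variance {variance:.3f} m^2")
```

The fused variance (about 0.034 m²) is lower than that of the best single sensor, yet the fusion step only ever sees one number and one variance per sensor: detail discarded during each sensor's independent processing, such as object shape, cannot be recovered here, which is the information-loss problem described above.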

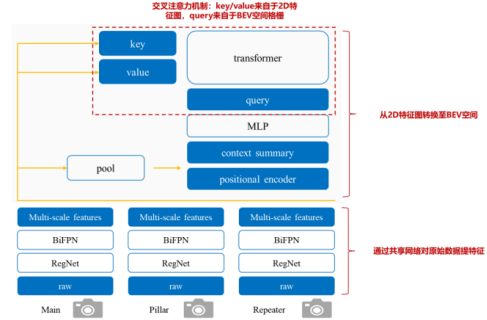

As the technology developed, feature-level fusion became the mainstream strategy, and the BEV (bird's-eye view) + Transformer architecture is a typical example. Tesla, for instance, uses the Transformer's cross-attention mechanism to transform features into BEV space, fusing images from multiple cameras to provide more complete and accurate environmental information and strengthen the model's perception of complex scenes. In BEV space, the model can understand the traffic around the vehicle more intuitively, which gives end-to-end decision-making a more reliable basis. Feature-level fusion strikes a good balance between information loss and computational cost: it retains more of the original information than late fusion while requiring less computation than fusing raw sensor data directly, improving both model performance and efficiency.
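
The bookkeeping at the heart of BEV feature fusion, placing features from all cameras into one shared top-down grid, can be sketched as below. This is a simplification under stated assumptions: each feature is assumed to be already transformed into the ego-vehicle frame (x forward, y left) using known camera extrinsics, and cells that receive features from several cameras are simply averaged. Tesla's system learns this transformation with cross-attention instead; `to_bev_grid` and the grid parameters are hypothetical.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]

def to_bev_grid(points: List[Tuple[float, float, List[float]]],
                x_range: Tuple[float, float] = (0.0, 50.0),
                y_range: Tuple[float, float] = (-25.0, 25.0),
                cell_m: float = 0.5) -> Dict[Cell, List[float]]:
    """Scatter ego-frame features into a bird's-eye-view grid, averaging per cell."""
    sums: Dict[Cell, List[float]] = {}
    counts: Dict[Cell, int] = {}
    for x, y, feat in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the area covered by the grid
        cell = (int((x - x_range[0]) // cell_m), int((y - y_range[0]) // cell_m))
        acc = sums.setdefault(cell, [0.0] * len(feat))
        sums[cell] = [a + f for a, f in zip(acc, feat)]
        counts[cell] = counts.get(cell, 0) + 1
    return {cell: [s / counts[cell] for s in vec] for cell, vec in sums.items()}

# Hypothetical features: one object seen by the front and a side camera,
# plus one point beyond the 50 m grid limit.
grid = to_bev_grid([
    (10.2, 0.1, [1.0, 0.0]),  # from the front camera
    (10.4, 0.3, [0.0, 1.0]),  # from a side camera, same 0.5 m cell
    (60.0, 0.0, [9.0, 9.0]),  # out of range, dropped
])
print(grid)
```

Because both cameras' features land in one cell, downstream layers see a single fused representation of the object rather than two separate per-camera detections.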

4. Tesla's end-to-end autonomous driving system

The vision-only FSD (Full Self-Driving) system that Tesla presented at AI Day 2021 is another typical case of end-to-end models in practice. Although the system can currently achieve only Level 2 driving automation, it performs well among comparable systems. Tesla considered two ways for its visual perception system to transform information from image space into vector space. The first completes all perception tasks in image space and then maps the results into vector space; the second first converts image features into vector space, fuses the features from multiple cameras, and then completes all perception tasks in vector space. After experimentation, Tesla chose the second approach as the core idea of the FSD perception system. The main reasons are that, in the first approach, perspective projection means that results that look good in the image can be inaccurate in vector space, especially at long range; and in a multi-camera system, a single camera's limited field of view may not capture a complete target, causing information loss.
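
The long-range accuracy problem can be quantified with a pinhole-camera model. Assuming a level camera at height h above flat ground with focal length f (in pixels), a ground point at depth Z appears f·h/Z pixels below the horizon, so Z = f·h/row. The sketch uses assumed values f = 1000 px and h = 1.5 m to compute how far the depth estimate shifts when the row is misread by a single pixel.

```python
def ground_depth(row_offset_px: float, focal_px: float, cam_height_m: float) -> float:
    """Depth of a ground-plane point from its image row (pinhole camera, level road).

    A point at depth Z projects row_offset = f * h / Z pixels below the horizon,
    so Z = f * h / row_offset.
    """
    return focal_px * cam_height_m / row_offset_px

def depth_error_for_one_pixel(depth_m: float, focal_px: float = 1000.0,
                              cam_height_m: float = 1.5) -> float:
    """How far the depth estimate moves if the row is misread by one pixel."""
    row = focal_px * cam_height_m / depth_m
    return ground_depth(row - 1.0, focal_px, cam_height_m) - depth_m

for z in (10.0, 50.0, 100.0):
    print(f"{z:5.0f} m -> {depth_error_for_one_pixel(z):.2f} m error")
```

A one-pixel error moves the estimate by about 0.07 m at 10 m but about 7.1 m at 100 m; the error grows roughly with Z²/(f·h), which is why image-space results that look accurate can be poor in vector space at long range.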

To implement this core idea, Tesla had to solve two problems: how to transform features from image space into vector space, and how to obtain annotated data in vector space. For the transformation, Tesla relies on three key techniques. First, it establishes the correspondence between image space and vector space with a Transformer and attention. The feature at each position in vector space can be regarded as a weighted combination of the features at all positions in the image; this weighting is computed through attention and spatial (positional) encoding, requires no manual design, and is learned end-to-end for the task at hand. Second, in mass production the camera calibration differs slightly from vehicle to vehicle, which creates inconsistencies between the input data and the trained model. Tesla proposed two remedies. The simple one is to concatenate the calibration parameters of all cameras, encode them with an MLP, and feed the result into the neural network. The better one is to use the calibration parameters to rectify each camera's images so that the corresponding cameras on different vehicles produce consistent images, which improves the model's generality and accuracy. Finally, to stabilize the output, handle occluded scenes better, and predict target motion, Tesla feeds multi-frame video into the network to extract temporal information, and also incorporates the vehicle's own motion so the network can align feature maps from different time steps.
For temporal processing, the FSD system uses an RNN that takes multi-frame image features together with vehicle motion information to predict targets' motion trajectories and behavior more accurately.
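
Two of the geometric steps mentioned above can be sketched directly. `rectify_pixel` maps a pixel from one vehicle's camera into a shared "virtual" camera, assuming the cameras differ only in focal length and principal point (no rotation, no lens distortion); warping a whole image applies the inverse of this mapping to every output pixel. `to_current_frame` re-expresses a static point from the previous frame in the current ego frame given the vehicle's motion, which is the alignment that ego-motion input makes possible. Both helpers and all numbers are hypothetical illustrations, not Tesla's code.

```python
import math
from typing import Tuple

Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels

def rectify_pixel(u: float, v: float, actual: Intrinsics,
                  virtual: Intrinsics) -> Tuple[float, float]:
    """Map a pixel from a camera's actual intrinsics to a shared virtual camera.

    Assumes the two cameras differ only in focal length and principal point,
    so p_virtual = K_virtual @ inv(K_actual) @ p_actual.
    """
    fx_a, fy_a, cx_a, cy_a = actual
    fx_v, fy_v, cx_v, cy_v = virtual
    x, y = (u - cx_a) / fx_a, (v - cy_a) / fy_a  # normalized ray direction
    return fx_v * x + cx_v, fy_v * y + cy_v

def to_current_frame(x_prev: float, y_prev: float,
                     dx: float, dy: float, dyaw: float) -> Tuple[float, float]:
    """Express a static point from the previous ego frame in the current one.

    (dx, dy, dyaw) is the ego motion between frames, measured in the previous
    frame: x forward, y left, yaw counter-clockwise in radians.
    """
    tx, ty = x_prev - dx, y_prev - dy            # undo the translation
    c, s = math.cos(dyaw), math.sin(dyaw)
    return c * tx + s * ty, -s * tx + c * ty     # undo the rotation

# A slightly miscalibrated camera mapped onto the shared virtual camera.
print(rectify_pixel(744.0, 358.0, actual=(1010.0, 1010.0, 643.0, 358.0),
                    virtual=(1000.0, 1000.0, 640.0, 360.0)))
# A sign 10 m ahead, after the car drives 2 m straight forward, is 8 m ahead.
print(to_current_frame(10.0, 0.0, dx=2.0, dy=0.0, dyaw=0.0))
```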

To obtain annotated data in vector space, Tesla reconstructs 3D scenes from the images of multiple cameras and annotates them in 3D. An annotator labels an object once in the 3D scene and immediately sees the label projected into every camera image, which makes corrections easy. For training, Tesla used large amounts of real-world driving data covering many road conditions, weather conditions, and driving scenarios. To improve training efficiency and model performance, Tesla may have used distributed training, in which the large dataset is split into subsets that are processed simultaneously on multiple computing devices, and the results from each device are combined through techniques such as parameter synchronization. Tesla may also have applied transfer learning, using parameters trained on another task or dataset as the initialization for the FSD model and then fine-tuning it; this exploits existing knowledge, reduces training time and data requirements, and can improve generalization. Together, these training methods help the FSD system make more accurate decisions in complex driving scenarios.
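
The parameter-synchronization step described above is commonly implemented as synchronous data-parallel training. The sketch simulates it in one process for a one-parameter linear model: each hypothetical "device" computes the gradient on its own shard, the gradients are averaged, and every replica applies the same update. With equal-sized shards, the averaged gradient equals the gradient on the full dataset, so the result matches ordinary full-batch training.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)

def grad_mse(w: float, batch: List[Sample]) -> float:
    """Gradient of mean((w*x - y)^2) with respect to w on one shard."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_step(w: float, shards: List[List[Sample]], lr: float) -> float:
    """One synchronous data-parallel SGD step.

    Each device computes a gradient on its own shard; the gradients are
    averaged (the synchronization / all-reduce step) and every replica
    applies the same update, so all copies of w stay identical.
    """
    grads = [grad_mse(w, shard) for shard in shards]  # would run in parallel
    return w - lr * sum(grads) / len(grads)

# Toy dataset y = 3x split across four hypothetical devices, two samples each.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i:i + 2] for i in range(0, 8, 2)]

w = 0.0
for _ in range(50):
    w = data_parallel_step(w, shards, lr=0.01)
print(w)
```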

5. Future directions for end-to-end models

(1) Fusing reinforcement learning with end-to-end models

In reinforcement learning, an agent interacts with its environment and learns an optimal policy from reward signals; combining it with end-to-end models can strengthen their decision-making. With reinforcement learning added, the model can keep trying and exploring while driving, receive reward or penalty signals based on the outcomes, and optimize its decision policy accordingly. At a complex intersection, for example, the model can try different passing orders and speed choices, accumulate experience, and improve its ability to handle complex scenarios, making autonomous driving decisions more flexible and intelligent.
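
The reward-driven learning loop can be illustrated with tabular Q-learning, which updates Q(s, a) toward r + γ·max_a' Q(s', a') with step size α. The environment below is a made-up two-state intersection, not a real driving simulator: going through a large gap earns +1, going into a small gap is a collision worth -10, and waiting costs -0.1 and reveals a new gap. All names, rewards, and hyperparameters are assumptions.

```python
import random

STATES = ("small_gap", "large_gap")  # hypothetical gap in cross traffic
ACTIONS = ("wait", "go")

def step(state, action, rng):
    """Toy intersection model; returns (reward, next_state, done)."""
    if action == "go":
        return (1.0 if state == "large_gap" else -10.0), None, True
    return -0.1, rng.choice(STATES), False  # waiting costs time, reveals a new gap

def q_learning(episodes=5000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state, done = rng.choice(STATES), False
        while not done:
            if rng.random() < epsilon:                   # explore
                action = rng.choice(ACTIONS)
            else:                                        # exploit current estimates
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            reward, nxt, done = step(state, action, rng)
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
print(policy)
```

The learned policy waits for small gaps and goes through large ones, a rule the agent was never told and discovered purely from rewards. Practical systems replace the table with a neural network and need far richer state.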

(2) Continuously optimizing model architecture and training algorithms

Researchers continue to explore new model architectures and training algorithms to improve end-to-end performance. One direction is more efficient network architectures that use fewer parameters and less computation, cutting training time and hardware cost while improving accuracy and generalization. Another is better training algorithms, such as adaptive learning-rate methods and more effective regularization, which prevent overfitting, improve training stability and convergence speed, and let the model learn well from limited data, making it better suited to practical applications.
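
As an example of adaptive learning-rate adjustment combined with regularization, the sketch below implements the Adam update rule (running averages of the gradient and its square, with bias correction) and applies it to a one-parameter loss with an L2 penalty. The loss (w - 3)² + λw² and λ = 0.5 are made up; the minimum lies at w = 3/(1 + λ) = 2, showing how regularization pulls the parameter toward zero.

```python
import math

def adam_minimize(grad, w0, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimize a 1-D function with Adam, given its gradient function."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g              # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g          # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)                 # bias correction for zero init
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)   # per-parameter adaptive step
    return w

# Loss = (w - 3)^2 + lam * w^2: a data-fit term plus an L2 regularization term.
lam = 0.5
w_star = adam_minimize(lambda w: 2 * (w - 3) + 2 * lam * w, w0=0.0)
print(w_star)  # should be close to 3 / (1 + lam) = 2
```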

(3) Expanding application scenarios through vehicle-road cooperation

Vehicle-road cooperation technology shares information through communication between vehicles and road infrastructure. Combined with it, an end-to-end model can obtain more road information, such as conditions ahead and traffic signal states, which expands its range of application scenarios. Roadside infrastructure can send congestion and construction-zone information to vehicles in advance, and the end-to-end model can adjust its driving decisions accordingly, planning routes or adjusting speed ahead of time. This improves traffic efficiency, enhances adaptability and safety in complex urban traffic, and moves autonomous driving from single-vehicle intelligence toward networked intelligence.
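
Speed adjustment based on signal status can be sketched in the style of a green-light speed advisory. The sketch assumes the roadside unit broadcasts when the next green phase starts and ends (signal phase and timing information) and that the vehicle may hold any constant speed within limits; `advisory_speed` is a hypothetical helper.

```python
from typing import Optional

def advisory_speed(distance_m: float, green_start_s: float, green_end_s: float,
                   v_min: float, v_max: float) -> Optional[float]:
    """Highest constant speed (m/s) that reaches the stop line during green.

    Arriving at time t = d / v requires green_start <= t <= green_end,
    i.e. d / green_end <= v <= d / green_start. Returns None if no speed
    within [v_min, v_max] works, meaning the vehicle should plan to stop.
    """
    lo = distance_m / green_end_s
    hi = distance_m / green_start_s if green_start_s > 0 else float("inf")
    lo, hi = max(lo, v_min), min(hi, v_max)
    return hi if lo <= hi else None

# 200 m from a signal that turns green in 20 s and stays green until 40 s.
print(advisory_speed(200.0, 20.0, 40.0, v_min=5.0, v_max=16.7))
```

Here the fastest speed that arrives on green is 10 m/s; if the light is green now but ends in 5 s, no speed up to 16.7 m/s reaches the line in time and the function returns None.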

Note: The data and images cited in the article are sourced from the internet